Apache Spark | Set Up Spark Locally

In this post we are going to set up Apache Spark on an Ubuntu machine. Apache Spark is a high-performance engine for large-scale data processing, covering both batch and streaming workloads. The Spark project has advertised speed-ups of up to 100x over Hadoop MapReduce for some in-memory workloads. Spark provides APIs for Scala, Java, Python, and R.

Step 1 : Download Spark from the official website (https://spark.apache.org/downloads.html).
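If you prefer the command line, the release used in this post can also be fetched with wget. This is a minimal sketch, not an official procedure. It assumes three things: wget is installed, the Apache archive keeps old releases under https://archive.apache.org/dist/spark/, and the helper name download_spark, which is hypothetical.

```shell
# Release coordinates, taken from the file name used in this post.
SPARK_VERSION="2.3.1"
HADOOP_VERSION="2.7"
SPARK_PKG="spark-${SPARK_VERSION}-bin-hadoop${HADOOP_VERSION}"
# Assumption: older releases are kept on the Apache archive server.
SPARK_URL="https://archive.apache.org/dist/spark/spark-${SPARK_VERSION}/${SPARK_PKG}.tgz"

# Hypothetical helper: download the tarball into the given directory.
download_spark() {
  local dest_dir="$1"
  mkdir -p "$dest_dir" || return 1
  # -c resumes a partial download; -P chooses the target directory.
  wget -c -P "$dest_dir" "$SPARK_URL"
}

# Usage (not run here): download_spark /opt/spark
```

The tarball lands in the directory you pass (here /opt/spark, which must be writable by your user), ready to be extracted.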

Choosing Spark 2.3.1, pre-built for Apache Hadoop 2.7, gives you spark-2.3.1-bin-hadoop2.7.tgz. This is a gzipped tar archive, not a zip; extract it as below.

rohan@rohan:/opt/spark$ tar -xvzf spark-2.3.1-bin-hadoop2.7.tgz
rohan@rohan:/opt/spark$ cd spark-2.3.1-bin-hadoop2.7
rohan@rohan:/opt/spark/spark-2.3.1-bin-hadoop2.7$ ll
total 124
drwxrwxr-x 15 rohan rohan 4096 Aug 4 17:08 ./
drwxrwxr-x 5 rohan rohan 4096 Jul 27 12:49 ../
drwxrwxr-x 4 rohan rohan 4096 Aug 4 17:01 bin/
drwxrwxr-x 2 rohan rohan 4096 Aug 4 17:12 conf/
drwxrwxr-x 5 rohan rohan 4096 Jun 1 22:49 data/
drwxrwxr-x 4 rohan rohan 4096 Jun 1 22:49 examples/
drwxrwxr-x 2 rohan rohan 12288 Jun 1 22:49 jars/
drwxrwxr-x 3 rohan rohan 4096 Jun 1 22:49 kubernetes/
-rw-rw-r-- 1 rohan rohan 18045 Jun 1 22:49 LICENSE
drwxrwxr-x 2 rohan rohan 4096 Jun 1 22:49 licenses/
drwxrwxr-x 2 rohan rohan 4096 Aug 4 17:13 logs/
-rw-rw-r-- 1 rohan rohan 24913 Jun 1 22:49 NOTICE
drwxrwxr-x 8 rohan rohan 4096 Jun 1 22:49 python/
drwxrwxr-x 3 rohan rohan 4096 Jun 1 22:49 R/
-rw-rw-r-- 1 rohan rohan 3809 Jun 1 22:49 README.md
-rw-rw-r-- 1 rohan rohan 161 Jun 1 22:49 RELEASE
drwxrwxr-x 2 rohan rohan 4096 Jun 1 22:49 sbin/
drwxrwxr-x 7 rohan rohan 4096 Aug 4 17:47 work/
drwxrwxr-x 2 rohan rohan 4096 Jun 1 22:49 yarn/

Step 2 : Create Slaves config (Worker nodes)

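Spark gets its performance by splitting work across worker nodes. In Spark's standalone cluster mode, one node runs the master, which manages the cluster and hands out resources, and 1..N nodes run workers (also called slaves), which execute the tasks. Note that the master is not the driver: the driver is the process that runs your application's main program and creates the SparkContext.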

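Add the settings below to spark-env.sh in the conf/ directory. Spark ships only conf/spark-env.sh.template, so if spark-env.sh does not exist yet, create it first with cp conf/spark-env.sh.template conf/spark-env.sh.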

SPARK_WORKER_CORES=2

SPARK_WORKER_MEMORY=2g

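SPARK_WORKER_CORES sets how many CPU cores the worker lets Spark applications use on this machine (2 here), and SPARK_WORKER_MEMORY sets how much memory in total it lets them use (2g here).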
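This edit can be scripted so that it is safe to repeat. The following is a minimal sketch, assuming GNU sed (as on Ubuntu) and that the first argument is the extracted spark-2.3.1-bin-hadoop2.7 directory. set_worker_env is a hypothetical helper, not part of Spark.

```shell
# Hypothetical helper: write worker limits into conf/spark-env.sh.
set_worker_env() {
  local spark_home="$1"   # e.g. /opt/spark/spark-2.3.1-bin-hadoop2.7
  local cores="$2"        # value for SPARK_WORKER_CORES
  local memory="$3"       # value for SPARK_WORKER_MEMORY, e.g. 2g
  local env_file="$spark_home/conf/spark-env.sh"

  # spark-env.sh does not exist by default; start from the shipped template.
  if [ ! -f "$env_file" ]; then
    cp "$spark_home/conf/spark-env.sh.template" "$env_file" || return 1
  fi

  # Remove earlier uncommented settings so reruns do not leave duplicates
  # (GNU sed in-place edit; commented template lines start with '#').
  sed -i '/^SPARK_WORKER_CORES=/d; /^SPARK_WORKER_MEMORY=/d' "$env_file" || return 1

  # The launch scripts source spark-env.sh, so plain VAR=value lines suffice.
  printf 'SPARK_WORKER_CORES=%s\nSPARK_WORKER_MEMORY=%s\n' "$cores" "$memory" >> "$env_file"
}
```

For the values in this post: set_worker_env /opt/spark/spark-2.3.1-bin-hadoop2.7 2 2g. Deleting the old lines before appending keeps the file clean if you change the values and rerun it.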

Now go to the sbin/ directory, which contains the scripts that start and stop the master and the workers:

rohan@rohan:/opt/spark/spark-2.3.1-bin-hadoop2.7/sbin$ ll
total 100
drwxrwxr-x 2 rohan rohan 4096 Jun 1 22:49 ./
drwxrwxr-x 15 rohan rohan 4096 Aug 4 17:08 ../
-rwxrwxr-x 1 rohan rohan 2803 Jun 1 22:49 slaves.sh*
-rwxrwxr-x 1 rohan rohan 1429 Jun 1 22:49 spark-config.sh*
-rwxrwxr-x 1 rohan rohan 5689 Jun 1 22:49 spark-daemon.sh*
-rwxrwxr-x 1 rohan rohan 1262 Jun 1 22:49 spark-daemons.sh*
-rwxrwxr-x 1 rohan rohan 1190 Jun 1 22:49 start-all.sh*
-rwxrwxr-x 1 rohan rohan 1274 Jun 1 22:49 start-history-server.sh*
-rwxrwxr-x 1 rohan rohan 2050 Jun 1 22:49 start-master.sh*
-rwxrwxr-x 1 rohan rohan 1877 Jun 1 22:49 start-mesos-dispatcher.sh*
-rwxrwxr-x 1 rohan rohan 1423 Jun 1 22:49 start-mesos-shuffle-service.sh*
-rwxrwxr-x 1 rohan rohan 1279 Jun 1 22:49 start-shuffle-service.sh*
-rwxrwxr-x 1 rohan rohan 3151 Jun 1 22:49 start-slave.sh*
-rwxrwxr-x 1 rohan rohan 1527 Jun 1 22:49 start-slaves.sh*
-rwxrwxr-x 1 rohan rohan 1857 Jun 1 22:49 start-thriftserver.sh*
-rwxrwxr-x 1 rohan rohan 1478 Jun 1 22:49 stop-all.sh*
-rwxrwxr-x 1 rohan rohan 1056 Jun 1 22:49 stop-history-server.sh*
-rwxrwxr-x 1 rohan rohan 1080 Jun 1 22:49 stop-master.sh*
-rwxrwxr-x 1 rohan rohan 1227 Jun 1 22:49 stop-mesos-dispatcher.sh*
-rwxrwxr-x 1 rohan rohan 1084 Jun 1 22:49 stop-mesos-shuffle-service.sh*
-rwxrwxr-x 1 rohan rohan 1067 Jun 1 22:49 stop-shuffle-service.sh*
-rwxrwxr-x 1 rohan rohan 1557 Jun 1 22:49 stop-slave.sh*
-rwxrwxr-x 1 rohan rohan 1064 Jun 1 22:49 stop-slaves.sh*
-rwxrwxr-x 1 rohan rohan 1066 Jun 1 22:49 stop-thriftserver.sh*

Step 3 : Run Master

Start the master, which assigns work to the worker nodes:

rohan@rohan:/opt/spark/spark-2.3.1-bin-hadoop2.7/sbin$ ./start-master.sh

starting org.apache.spark.deploy.master.Master, logging to /opt/spark/spark-2.3.1-bin-hadoop2.7/logs/spark-rohan-org.apache.spark.deploy.master.Master-1-rohan-Latitude-E5470.out

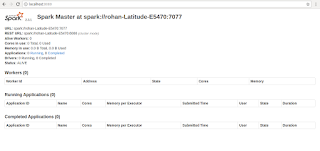

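Open http://localhost:8080 in a browser to see the status of the Spark master. The page also shows the master URL (spark://HOST:7077 by default) that workers use to connect.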
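Workers connect to the master through its spark://HOST:PORT URL. Besides reading it from the web UI, you can pull it from the master's log file. This is a sketch that assumes the log contains a line with "Starting Spark master at spark://HOST:PORT", which is the wording Spark 2.x logs as far as we know. master_url_from_log is a hypothetical helper.

```shell
# Hypothetical helper: print the master URL recorded in a master log file.
master_url_from_log() {
  local log_file="$1"
  local url
  # Keep only the most recent start-up line, in case the log holds several runs.
  url=$(grep 'Starting Spark master at ' "$log_file" 2>/dev/null \
        | tail -n 1 \
        | sed 's/.*Starting Spark master at //')
  # Fail if no such line was found, so callers can detect a missing master.
  [ -n "$url" ] || return 1
  printf '%s\n' "$url"
}

# Usage from sbin/ (log path as printed by start-master.sh above):
#   ./start-slave.sh "$(master_url_from_log ../logs/spark-rohan-org.apache.spark.deploy.master.Master-1-rohan-Latitude-E5470.out)"
```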

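Start a worker (slave) on this machine by passing it the master URL, as below. To start workers on more machines, list their hostnames in conf/slaves (create it from conf/slaves.template) and run sbin/start-slaves.sh; see the Spark standalone mode documentation on the official site for details.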

rohan@rohan:/opt/spark/spark-2.3.1-bin-hadoop2.7/sbin$ ./start-slave.sh spark://rohan-Latitude-E5470:7077

starting org.apache.spark.deploy.worker.Worker, logging to /opt/spark/spark-2.3.1-bin-hadoop2.7/logs/spark-rohan-org.apache.spark.deploy.worker.Worker-1-rohan-Latitude-E5470.ou
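For more than one worker, conf/slaves holds one hostname per line. sbin/start-slaves.sh then starts a worker on each of those hosts over SSH, so passwordless SSH from the master is assumed. Below is a minimal sketch of writing that file; write_slaves_file and the hostnames in the usage comment are hypothetical.

```shell
# Hypothetical helper: write conf/slaves with one worker hostname per line.
write_slaves_file() {
  local spark_home="$1"
  shift
  local slaves_file="$spark_home/conf/slaves"
  local host
  : > "$slaves_file" || return 1   # truncate: start from an empty file
  for host in "$@"; do
    printf '%s\n' "$host" >> "$slaves_file"
  done
}

# Usage (placeholder hostnames):
#   write_slaves_file /opt/spark/spark-2.3.1-bin-hadoop2.7 localhost worker-2
#   /opt/spark/spark-2.3.1-bin-hadoop2.7/sbin/start-slaves.sh
```

Rewriting the whole file on each call keeps it an exact list of the workers you want, rather than accumulating old entries.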

We have now set up Spark on our machine. In the next post, we will see how to run our first Spark program.
